Research Summary

I’m a machine learning scientist with a background in physics. I am currently working on generative models for small molecule drug discovery at Variational AI.

My PhD research at UC Berkeley focused on how supermassive black holes have evolved and interacted with their host galaxies over cosmic time. In particular, I was interested in the dynamics of stars within these galaxies, what stellar motions can tell us about how mass is distributed within them, and how computational and machine learning methods can be used to improve these inferences.

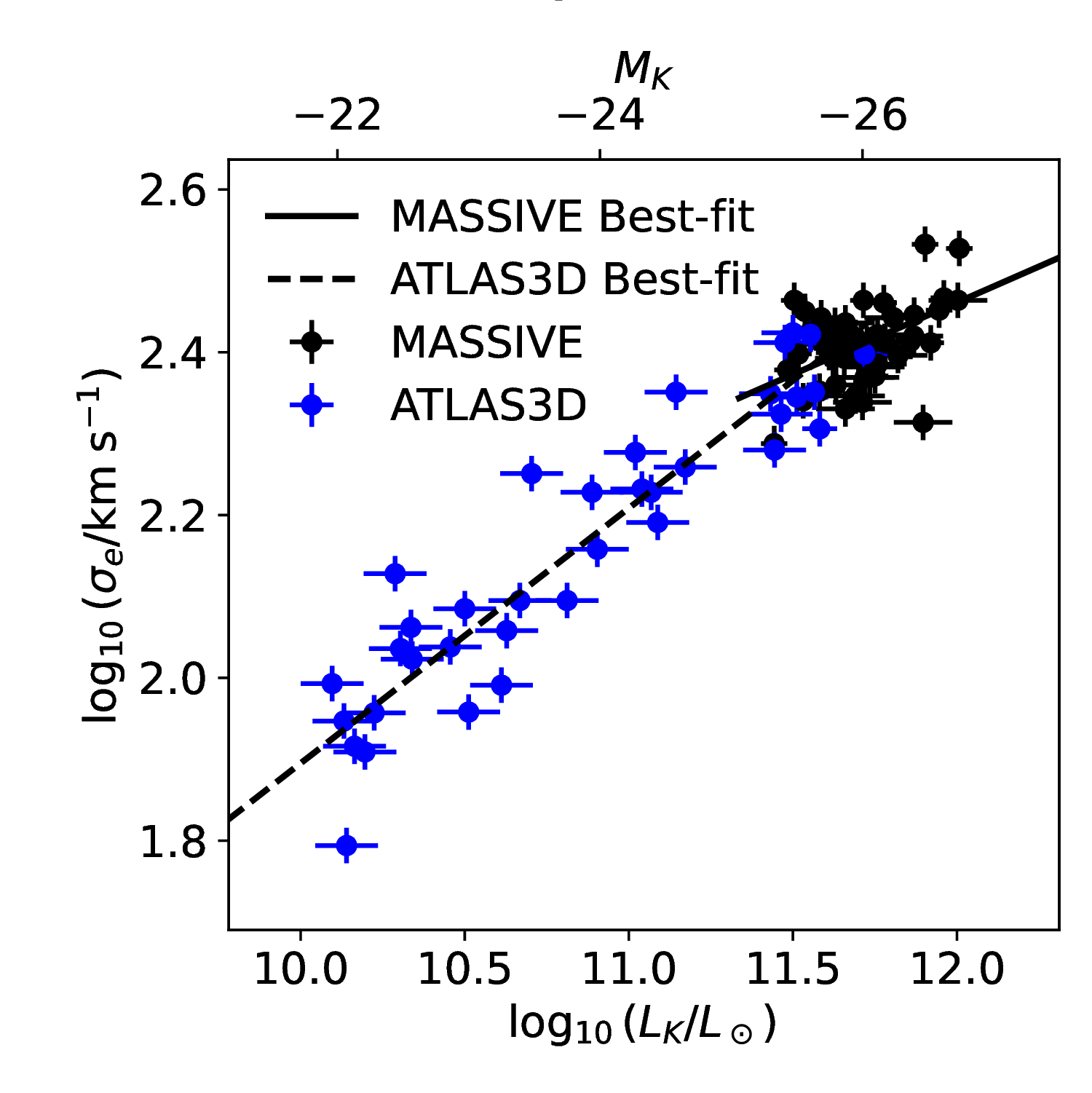

Image processing and galaxy scaling relations

Quenneville et al. 2024, MNRAS stad3137

Key topics:

Image Processing, Bayesian Inference, Linear Regression, Galaxy Formation

By measuring the present-day properties of galaxy populations, we can make inferences about how they formed. This requires accurate measurements of galaxy size and total luminosity, which in turn require sensitive, wide-field galaxy images and precise image processing. In this paper, we acquire and process such images for a volume- and luminosity-limited sample of nearby galaxies. We use the resulting size and luminosity measurements to model how size scales with luminosity among the most luminous galaxies. We find that these galaxies follow scaling relations distinct from those of lower-mass galaxies, suggesting that they formed through different mechanisms.
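A scaling relation of this kind is usually written as a power law, R ∝ L^β, which becomes a straight line in log space. The sketch below fits that line by ordinary least squares to two hypothetical synthetic samples with different slopes, to show what "distinct scaling relations" means numerically. It is a plain least-squares stand-in, not the Bayesian regression listed among the paper's topics; the function names, slopes, intercepts, scatter, and luminosity ranges are all illustrative assumptions, and units are left unspecified.

```python
import math
import random


def fit_line(x, y):
    """Ordinary least-squares fit of y = a + b * x; returns (a, b)."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope


def fit_power_law(luminosity, size):
    """Fit size = 10**a * luminosity**b by least squares in log10 space; returns (a, b)."""
    return fit_line([math.log10(v) for v in luminosity], [math.log10(v) for v in size])


def synthetic_sample(n, log_l_range, slope, intercept, scatter, rng):
    """Hypothetical sample obeying log R = intercept + slope * log L plus Gaussian scatter (dex)."""
    lum, size = [], []
    for _ in range(n):
        log_l = rng.uniform(*log_l_range)
        log_r = intercept + slope * log_l + rng.gauss(0.0, scatter)
        lum.append(10 ** log_l)
        size.append(10 ** log_r)
    return lum, size


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical populations: a shallower relation for fainter galaxies and a
    # steeper one for the brightest (both meet at log L = 10.5).
    faint = synthetic_sample(200, (9.0, 10.5), 0.6, -5.5, 0.05, rng)
    bright = synthetic_sample(200, (10.5, 12.0), 1.2, -11.8, 0.05, rng)
    for name, (lum, size) in [("faint", faint), ("bright", bright)]:
        a, b = fit_power_law(lum, size)
        print(f"{name}: log R = {a:.2f} + {b:.2f} log L")
```

Fitting the two populations separately recovers two different slopes; a real analysis would also model measurement errors and intrinsic scatter rather than treating them as plain least-squares residuals.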

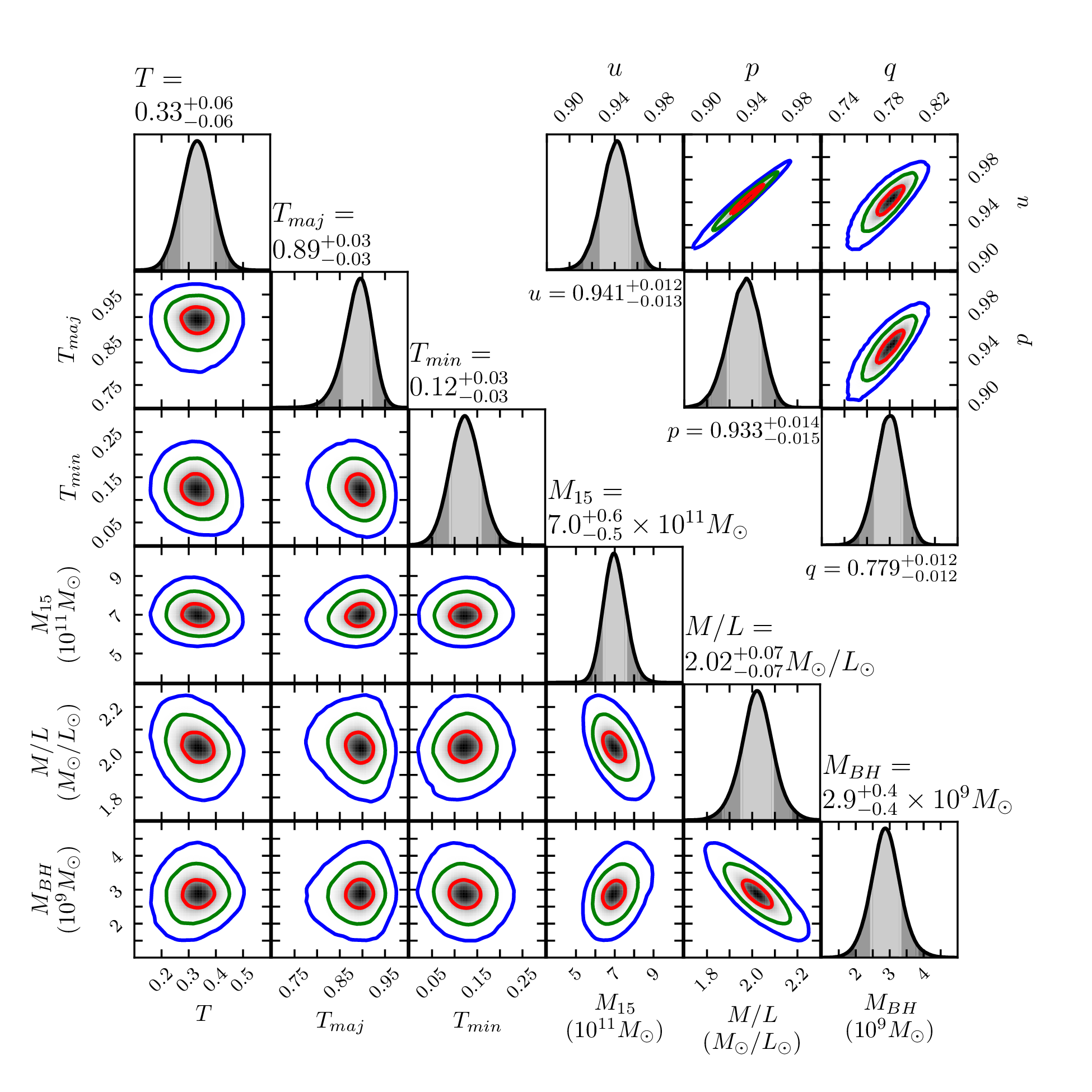

Surrogate optimization for triaxial orbit modelling

Quenneville et al. 2022, ApJ 926 (1), 30

Key topics:

Gaussian Process Regression, 3D Reconstruction, Optimization, Surrogate Models

Many real galaxies show clear deviations from axisymmetry, which suggests that triaxial modelling is needed to describe their dynamics accurately. Previous studies have found that some black hole mass measurements change drastically when triaxial models are used instead of axisymmetric ones. In this paper, we identify and resolve several issues that affected previous triaxial black hole measurements. We also describe and implement a model search scheme based on a Gaussian process regression surrogate model that fairly samples different regions of the triaxial shape space and requires drastically fewer models to be computed, making the search much more computationally efficient. We use these results to perform a triaxial measurement of the black hole at the center of NGC 1453.
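The core idea of a surrogate search is that each orbit model is expensive, so a cheap Gaussian process is fit to the models computed so far and used to decide which model to compute next. The sketch below shows that loop in one dimension, assuming a toy quadratic misfit in place of a real model-vs-data χ² over a shape parameter. The squared-exponential kernel, its length scale, the lower-confidence-bound rule for picking the next point, and all function names are assumptions for illustration; the paper's scheme works over the multi-dimensional triaxial shape space and is not reproduced here.

```python
import math


def rbf(a, b, length=0.2, amp=1.0):
    """Squared-exponential covariance between two scalar inputs."""
    return amp * math.exp(-0.5 * ((a - b) / length) ** 2)


def cholesky(k):
    """Lower-triangular Cholesky factor of a symmetric positive-definite matrix."""
    n = len(k)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = k[i][j] - sum(low[i][m] * low[j][m] for m in range(j))
            low[i][j] = math.sqrt(s) if i == j else s / low[j][j]
    return low


def solve_lower(low, b):
    """Forward substitution: solve L y = b."""
    y = []
    for i in range(len(b)):
        y.append((b[i] - sum(low[i][m] * y[m] for m in range(i))) / low[i][i])
    return y


def solve_upper_t(low, y):
    """Back substitution: solve L^T x = y."""
    n = len(y)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(low[m][i] * x[m] for m in range(i + 1, n))) / low[i][i]
    return x


def gp_posterior(xs, ys, xq, noise=1e-6):
    """GP posterior mean and standard deviation at xq (constant mean = sample mean)."""
    mean_y = sum(ys) / len(ys)
    k = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    low = cholesky(k)
    alpha = solve_upper_t(low, solve_lower(low, [y - mean_y for y in ys]))
    kq = [rbf(xq, a) for a in xs]
    mu = mean_y + sum(kv * al for kv, al in zip(kq, alpha))
    v = solve_lower(low, kq)
    var = max(rbf(xq, xq) - sum(vi * vi for vi in v), 0.0)
    return mu, math.sqrt(var)


def surrogate_minimize(objective, n_init=3, n_iter=8, kappa=2.0, grid_size=101):
    """Minimize objective on [0, 1]; each new point minimizes mean - kappa * std (n_init >= 2)."""
    grid = [i / (grid_size - 1) for i in range(grid_size)]
    xs = [i / (n_init - 1) for i in range(n_init)]  # evenly spaced initial design
    ys = [objective(x) for x in xs]
    for _ in range(n_iter):
        candidates = [x for x in grid if x not in xs]  # never re-run an existing model

        def lcb(x):
            mu, sd = gp_posterior(xs, ys, x)
            return mu - kappa * sd

        x_next = min(candidates, key=lcb)
        xs.append(x_next)
        ys.append(objective(x_next))
    best = min(range(len(xs)), key=lambda i: ys[i])
    return xs[best], ys[best], len(xs)


if __name__ == "__main__":
    def toy_misfit(x):
        # Hypothetical stand-in for a chi-squared misfit over one shape parameter.
        return 4.0 * (x - 0.63) ** 2

    x_best, y_best, n_evals = surrogate_minimize(toy_misfit)
    print(f"best x = {x_best:.2f}, misfit = {y_best:.4f}, evaluations = {n_evals}")
```

The `kappa` parameter trades off exploring poorly sampled regions (large posterior standard deviation) against refining near the current best fit; only `n_init + n_iter` expensive evaluations are made, compared with the 101 a brute-force grid would need.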

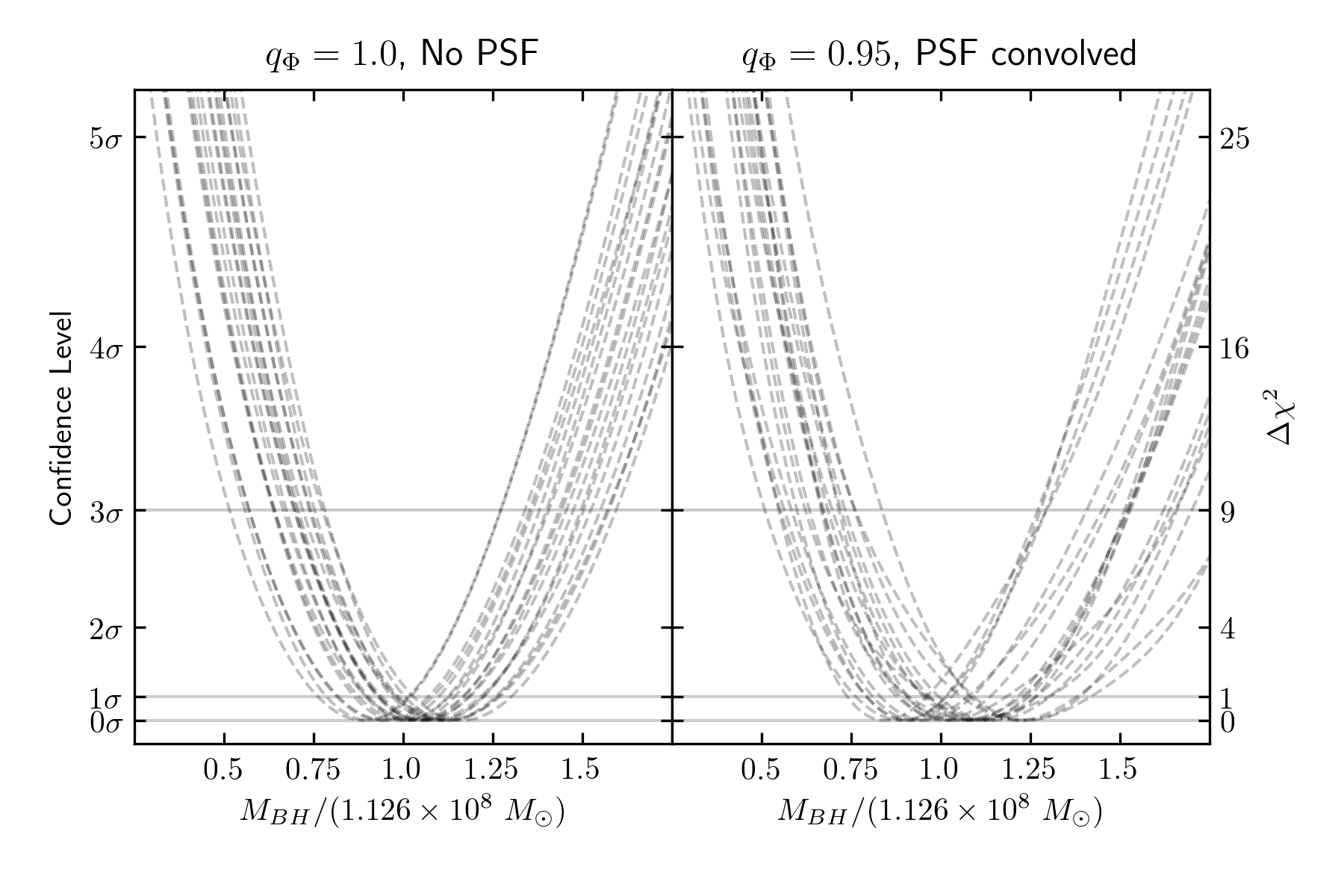

Model validation with simulated axisymmetric galaxies

Quenneville et al. 2021, ApJS 254 (2), 25

Key topics:

Simulated data, Model validation, Orbital Dynamics, Supermassive black holes

Fully triaxial modelling introduces many complexities that aren’t present in axisymmetric models. Because of this, triaxial modelling codes are sometimes used to perform “nearly” axisymmetric modelling. However, some previous papers have found significant inconsistencies when doing this. In this paper, we show how to use a triaxial orbit code effectively in the axisymmetric limit. We also show that this approach accurately recovers the central black hole mass in mock data.

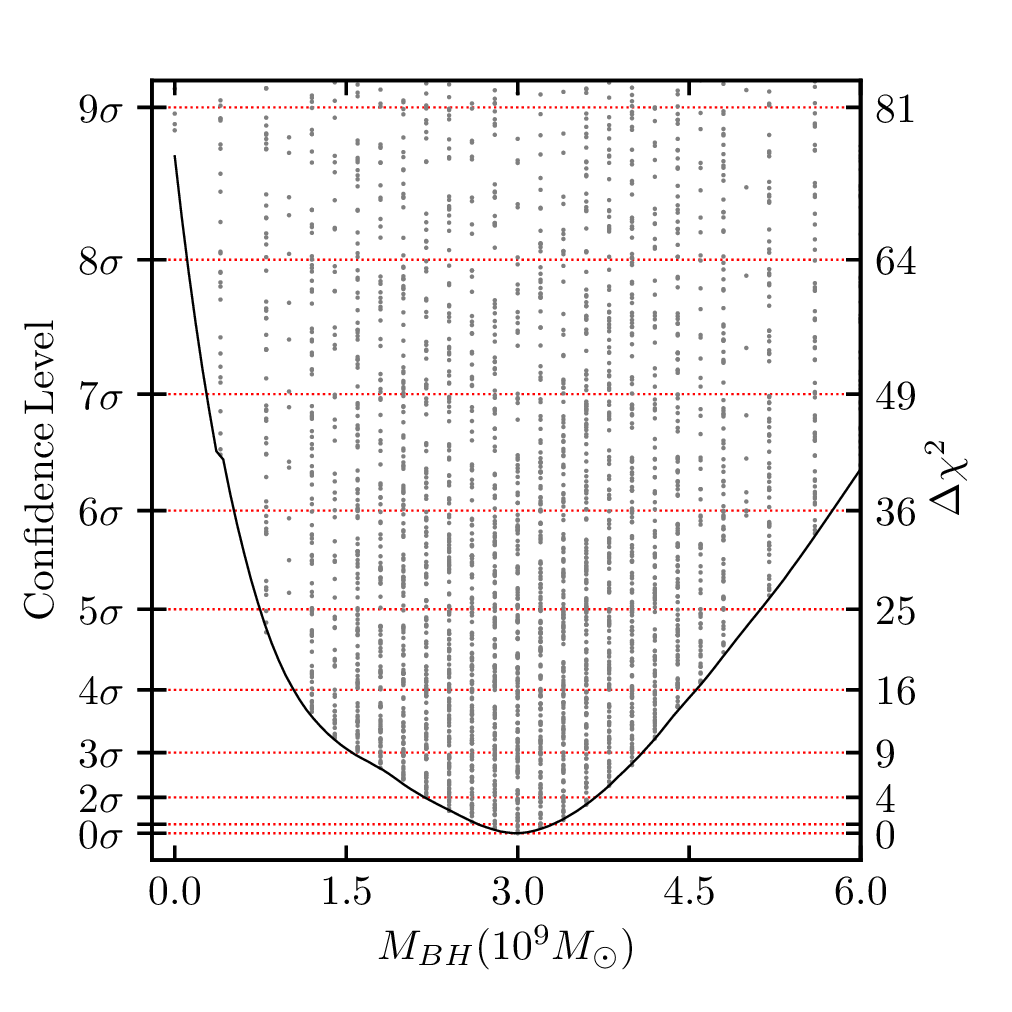

The central black hole in NGC 1453

Liepold, Quenneville, et al. 2020, ApJ 891 (1), 4

Key topics:

Hyperspectral Image Processing, Inverse Problems, Supermassive black holes

NGC 1453 is a nearby massive elliptical galaxy. It rotates quickly, and its rotation axis appears nearly aligned with its projected minor axis, which makes it a natural candidate for axisymmetric modelling. We processed hyperspectral images of NGC 1453 to measure its stellar kinematics. We then performed axisymmetric modelling of NGC 1453 and found a central black hole of about 3 billion solar masses. We also found that regularizing constraints are needed to make the model’s kinematics realistic.

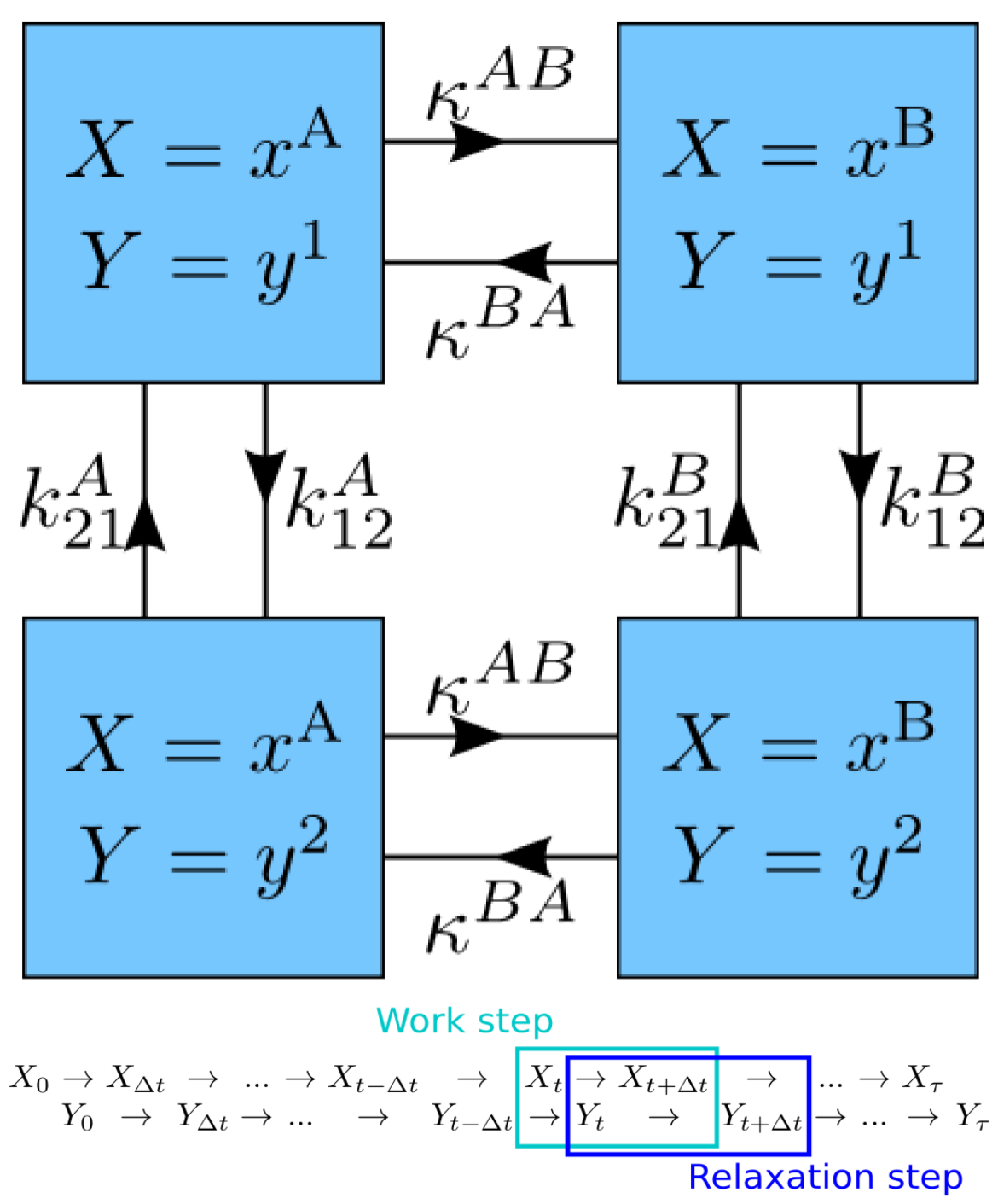

Model prediction and energetic efficiency

Quenneville & Sivak 2018, Entropy 20 (9), 707

Key topics:

Information Theory, Complexity, Thermodynamic Efficiency

When a system evolves stochastically under the influence of its environment, the system state becomes correlated with the environment state. If the environment exhibits temporal correlations, the system state will also be correlated with future environment states, so the system acts as an implicit predictive model of its environment. The complexity of this model can be quantified by the mutual information between the system and environment that is not predictive of future environment states. This measure of model complexity is a lower bound on the heat the system dissipates, linking the model's information efficiency to its thermodynamic efficiency. In this paper, we explore how this relationship plays out for a simple two-state system in a two-state environment and find a lower bound on the rate at which the system can learn about its environment in the steady-state limit.
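The non-predictive information described above can be written as I(s_t; e_t) − I(s_t; e_{t+1}): what the system knows about the present environment minus what that knowledge says about the next environment state. The sketch below computes both terms for a two-state system and a two-state environment, assuming a discrete time step, an environment that flips symmetrically with a hypothetical probability `flip_prob` independently of the system, and a hypothetical steady-state joint distribution. It only evaluates the information terms; it does not model the dynamics, the heat dissipation, or the bounds derived in the paper.

```python
import math


def mutual_information(joint):
    """Mutual information, in nats, of a joint distribution given as a 2D list."""
    row_marg = [sum(row) for row in joint]
    col_marg = [sum(col) for col in zip(*joint)]
    mi = 0.0
    for i, row in enumerate(joint):
        for j, p in enumerate(row):
            if p > 0.0:
                mi += p * math.log(p / (row_marg[i] * col_marg[j]))
    return mi


def step_environment(joint, flip_prob):
    """Map P(s_t, e_t) to P(s_t, e_{t+1}) for a two-state environment that flips
    with probability flip_prob per step, independently of the system."""
    trans = [[1.0 - flip_prob, flip_prob], [flip_prob, 1.0 - flip_prob]]
    return [[sum(joint[s][e] * trans[e][e_next] for e in range(2)) for e_next in range(2)]
            for s in range(2)]


def information_split(joint, flip_prob):
    """Return (I(s_t; e_t), predictive I(s_t; e_{t+1}), non-predictive difference)."""
    total = mutual_information(joint)
    predictive = mutual_information(step_environment(joint, flip_prob))
    return total, predictive, total - predictive


if __name__ == "__main__":
    # Hypothetical steady state: rows index the system state, columns the environment
    # state; the system matches the environment 80% of the time.
    joint = [[0.4, 0.1], [0.1, 0.4]]
    for q in (0.0, 0.1, 0.5):
        total, pred, nonpred = information_split(joint, q)
        print(f"flip_prob={q}: total={total:.4f}, predictive={pred:.4f}, "
              f"non-predictive={nonpred:.4f}")
```

Because the environment evolves independently of the system, s_t → e_t → e_{t+1} forms a Markov chain, so the data processing inequality guarantees the non-predictive part is never negative. It vanishes for a static environment (`flip_prob=0`) and equals all of the mutual information when the environment is memoryless (`flip_prob=0.5`).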

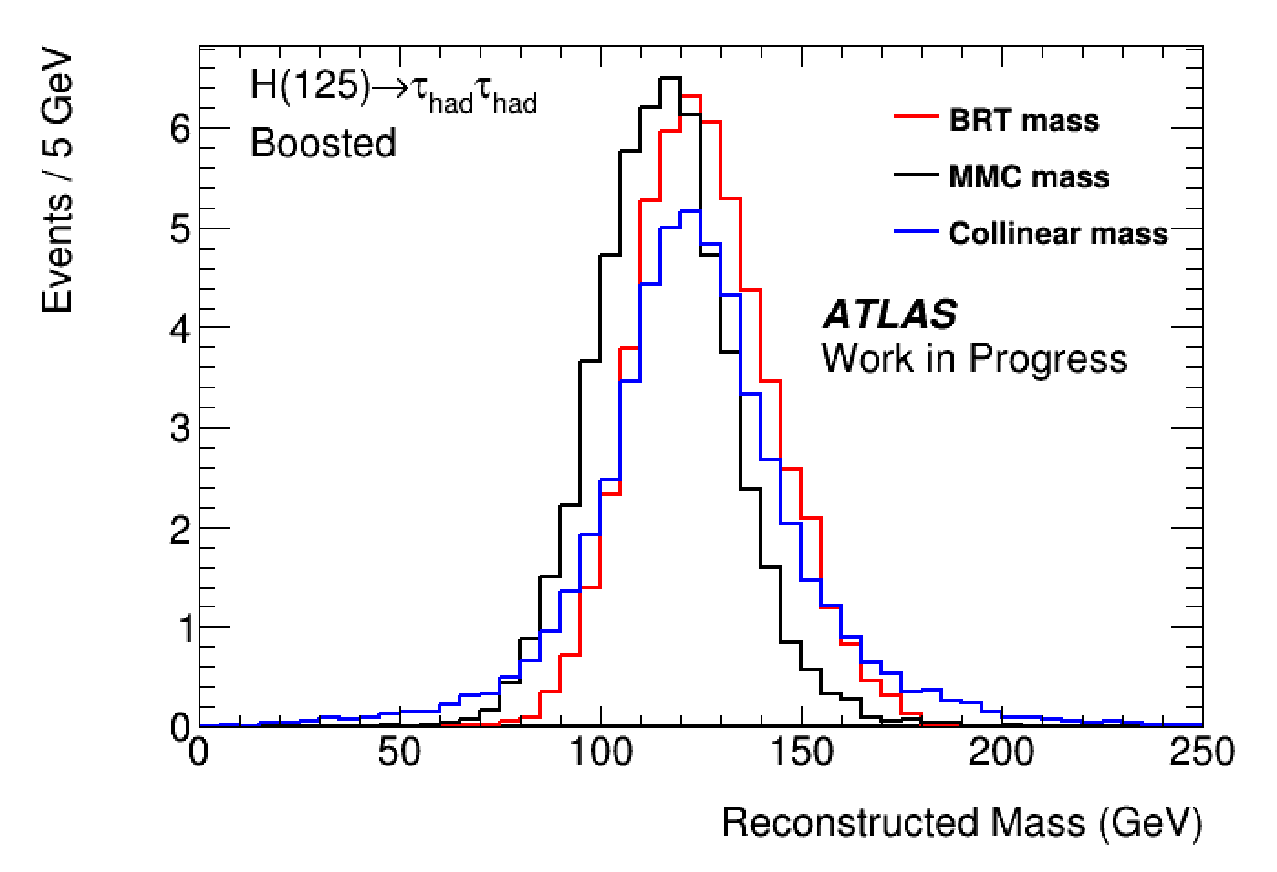

Boosted tree models for particle physics

Quenneville 2014, CERN Internal Note (not peer-reviewed)

Key topics:

Boosting, Decision Trees, Regression, Model Validation, Particle Physics

When a Higgs boson decays into a pair of tau leptons, the final decay products include multiple neutrinos. These particles interact extremely weakly and pass through detectors unobserved. As a result, the mass of the original Higgs boson cannot be reconstructed directly from the observed decay products, although it can be inferred statistically from them. In this report, we describe the use of boosted tree models to reconstruct the Higgs boson mass. The resulting model achieves accuracy comparable to existing models while requiring a tiny fraction of the computation time.
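A boosted tree regressor builds its prediction as a sum of many small trees, each fit to the errors left by the trees before it. The sketch below implements that idea from scratch with depth-1 trees (stumps) and squared-error loss. The inputs are synthetic placeholders, not detector quantities, and the stump depth, learning rate, number of rounds, and function names are assumptions; the report's actual features, software, and hyperparameters are not described here.

```python
import random


def fit_stump(xs, residuals):
    """Return (feature, threshold, left_value, right_value) for the single split
    that minimizes the squared error of the residuals."""
    n = len(xs)
    total = sum(residuals)
    best = None
    for f in range(len(xs[0])):
        order = sorted(range(n), key=lambda i: xs[i][f])
        left_sum = 0.0
        for k in range(1, n):
            left_sum += residuals[order[k - 1]]
            lo, hi = xs[order[k - 1]][f], xs[order[k]][f]
            if lo == hi:
                continue  # no threshold separates identical feature values
            right_sum = total - left_sum
            # SSE = sum(r^2) - (left_sum^2 / k + right_sum^2 / (n - k)), so maximize this term.
            gain = left_sum ** 2 / k + right_sum ** 2 / (n - k)
            if best is None or gain > best[0]:
                best = (gain, f, (lo + hi) / 2, left_sum / k, right_sum / (n - k))
    _, f, thr, left_value, right_value = best
    return f, thr, left_value, right_value


def boost(xs, ys, n_rounds=100, learning_rate=0.2):
    """Gradient boosting for squared loss using stumps as weak learners."""
    base = sum(ys) / len(ys)
    preds = [base] * len(ys)
    stumps = []
    for _ in range(n_rounds):
        # For squared loss, the negative gradient is simply the residual.
        residuals = [y - p for y, p in zip(ys, preds)]
        f, thr, lv, rv = fit_stump(xs, residuals)
        stumps.append((f, thr, lv, rv))
        preds = [p + learning_rate * (lv if x[f] <= thr else rv) for x, p in zip(xs, preds)]
    return base, learning_rate, stumps


def predict(model, x):
    """Sum the base value and the shrunken contribution of every stump."""
    base, learning_rate, stumps = model
    return base + sum(learning_rate * (lv if x[f] <= thr else rv) for f, thr, lv, rv in stumps)


if __name__ == "__main__":
    rng = random.Random(0)
    # Synthetic placeholder data: two observed features and a target to reconstruct.
    xs = [[rng.random(), rng.random()] for _ in range(100)]
    ys = [2.0 * a + (1.0 if b > 0.5 else 0.0) for a, b in xs]
    model = boost(xs, ys)
    mse = sum((predict(model, x) - y) ** 2 for x, y in zip(xs, ys)) / len(ys)
    print(f"training MSE: {mse:.4f}")
```

Once trained, prediction is just a sum of threshold comparisons, which is one reason tree ensembles can be far cheaper to evaluate than likelihood-based reconstructions; a real study would also check accuracy on held-out data rather than on the training set.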